No one can deny that health inequities are a global issue. The United States, despite decades of progress, has one of the highest rates of health disparities in the world. Our health care system is deeply flawed, and we must find solutions quickly. Artificial intelligence (AI) is being heralded as a way to democratize access to health care. While the promise of AI is very real, we must temper our optimism with an awareness of the challenges this technology presents to medicine.

African Americans and other communities of color still face severe health disparities. A recent analysis by researchers at the University of Pennsylvania found that many Black women described medical mistrust, dismissiveness, and health care skepticism when treated in urban emergency rooms. A similar study in Canada recently found that nearly a third of Black patients reported feelings of racial discrimination when receiving health care.

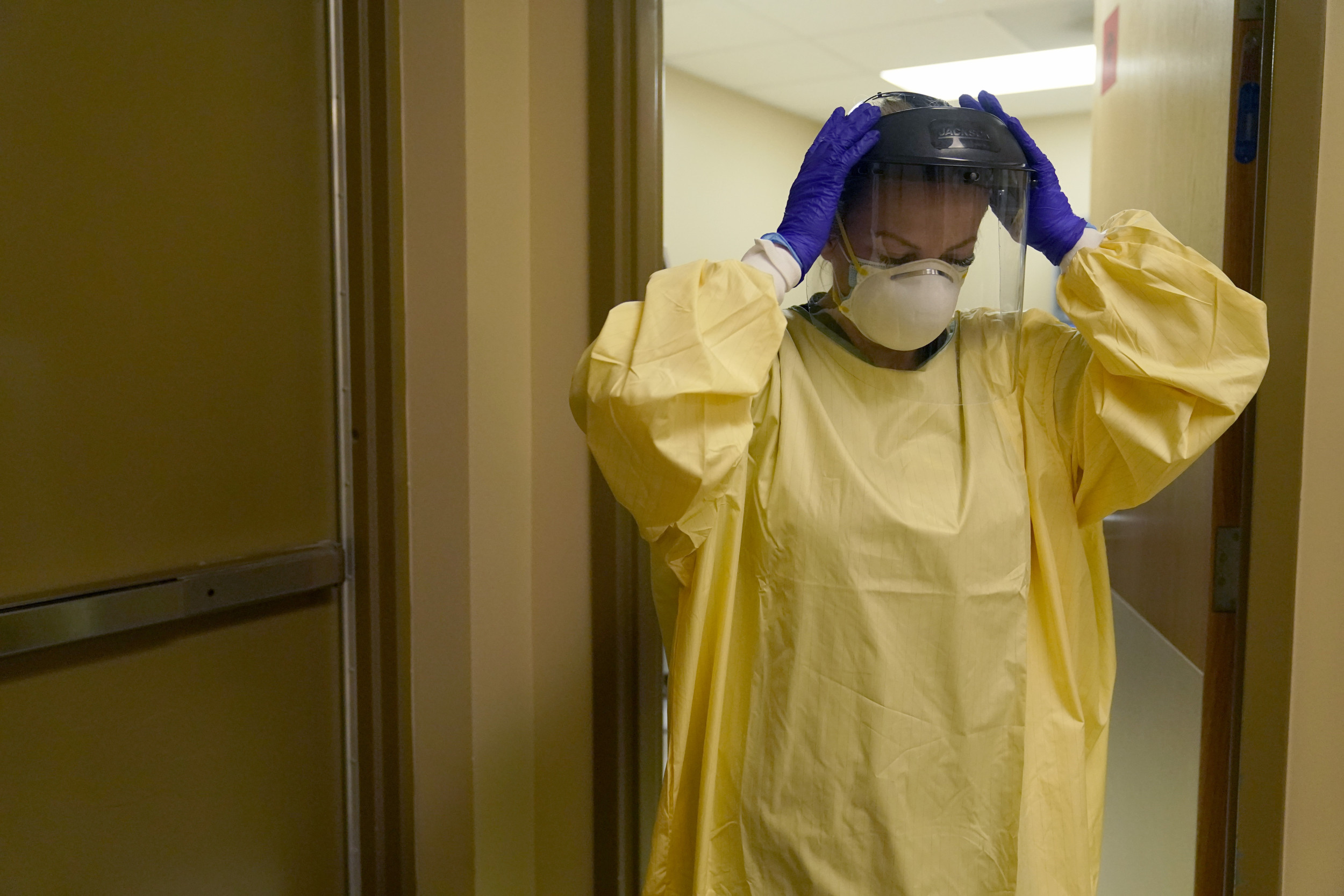

Jeff Roberson/AP Images

Latinos report obstacles in obtaining quality care as well. Language barriers sometimes mean that people don’t seek help for preventable diseases. Other times, diseases go untreated due to a lack of insurance or mistrust of the system. As a result, Latinos suffer disproportionately from HIV/AIDS, hepatitis C, hypertension, and diabetes.

Over the past few decades, targeted solutions have been aimed at minimizing these communities’ health inequities. However, with the rise of artificial intelligence, many are beginning to ask how the technology will affect health care and whether it can reduce the harmful disparities felt by minority groups.

It’s already widely understood that artificial intelligence will have major implications for the future. The technology is changing how authors write, students learn, and businesses profit. But no one knows for sure whether, in the long term, AI will ultimately be a tool that aids humanity or one that develops to control us.

AI has become a buzzword in health care, especially in discussions of how technology will affect fields such as radiology and pathology. Many are paying close attention to how deep learning algorithms can help radiologists and pathologists better identify and interpret their findings. A recent study by physicians at Johns Hopkins University reported that “roughly 30% of radiologists in the United States are currently utilizing AI within their practice,” a figure that is expected to grow as AI evolves.

What’s less well known is how doctors and other medical professionals can use AI to improve care and reduce the barriers that underserved populations face. In 2024, researchers at Baylor College of Medicine in Texas reported that AI had the potential to help physicians better identify a severe form of peripheral arterial disease called chronic limb-threatening ischemia. This disease affects African Americans, Latinos, and women at a higher rate than other demographics. The researchers postulated that AI algorithms could closely predict the outcome of the disease and improve diagnostic accuracy.

While the potential for AI to reduce bias and disparities in health care is seemingly high, scientists have been quick to point out that AI can also do the opposite. In late 2023, a group of researchers from MIT and other institutions published an article describing how the way AI and machine learning algorithms are built can amplify biases. They explained that algorithms are created using data from physicians and other health care professionals, and that this input data can itself reflect prejudice or outside influence.

Another point these researchers made is that input data often isn’t diverse or inclusive. Health data on skin disease has more samples from lighter skin than darker skin. The same is true for genetic testing, which is typically more available to those of a higher socioeconomic status. When this data is fed into algorithms and amplified, systemic bias and largely non-generalizable information are predicted to spread alarmingly fast to health professionals.

This flawed output can lead to deadly consequences. One example is a recent AI algorithm designed to predict the risk of breast cancer. The algorithm was more likely to incorrectly designate Black patients as “low risk.” This misclassification could lead to delayed or inadequate treatment, potentially worsening health outcomes. It’s a stark reminder of the real-world implications of biased AI in health care.

The next five to 10 years will be critical in terms of how health care leaders implement emerging technologies. What’s certain is that AI will change the health care landscape. What’s still to be decided is whether it will be a force that enables and promotes inclusivity or one that compounds existing disparities and reverses our community’s progress over the past decades. As this technology grows rapidly, we must foster continued cross-disciplinary conversations among policymakers, computer scientists, physicians, and, most importantly, diverse community members. This collaboration is critical to ensuring that AI is a force for good in health care.

Tamer Rajai Hage is a scientific researcher.

The views expressed in this article are the writer’s own.
